IO virtualization can be carried out at three implementation layers: the system call layer (from the application to the guest OS), the driver call layer (the interface between the guest OS and its IO device drivers) and the IO operation layer (the interface between the guest OS's IO device drivers and the host hypervisor, also called the VMM or Virtual Machine Monitor). When IO virtualization is done at the driver call layer, the IO device driver in the guest OS is modified; this is the basis of paravirtualization. At this layer, an optimized IO virtualization method was created: virtio. Initially, the virtio back-end was implemented in user space. Then vhost was introduced, which moved the virtio back-end out of user space and into KVM (the host kernel). Finally, DPDK (Data Plane Development Kit) takes vhost out of KVM and puts it into a separate user-space process.

1. Virtio

Virtio is an important element of KVM's paravirtualization support.

By definition: Virtio is a virtualization standard for network and disk device drivers where just the guest's device driver "knows" it is running in a virtual environment, and cooperates with the hypervisor. This enables guests to get high performance network and disk operations, and gives most of the performance benefits of paravirtualization. (Source: http://wiki.libvirt.org/page/Virtio)

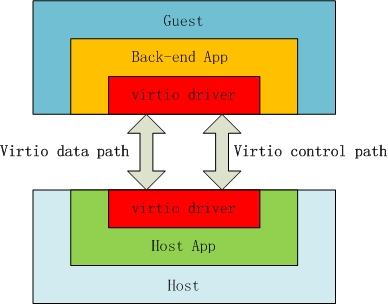

Picture 1. Virtio Implementation. (Source: www.myexception.cn)

In Picture 1, the virtio implementation has two sides: the guest OS side, called the front-end driver, and the QEMU side, called the back-end driver. Because the host side is implemented in user space (QEMU), no special driver is needed in the host; only the guest OS is aware of the virtio driver (the front-end driver). This may be why many diagrams of virtio show the virtio driver only on the guest OS side. More information about virtio is available at: http://www.ibm.com/developerworks/library/l-virtio/
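To make the front-end/back-end split more concrete, here is a minimal Python sketch of the shared ring the two sides use. It loosely follows the "split virtqueue" of the virtio specification (a descriptor table, an available ring written by the guest driver and a used ring written by the back-end), but it is a simplified toy model under stated assumptions: descriptors hold Python bytes instead of guest-physical addresses, there is no descriptor chaining, and notifications (the guest "kick" and the host interrupt) are left out. The names `SplitVirtqueue`, `add_buffer`, `process` and `reclaim` are hypothetical.

```python
from dataclasses import dataclass
from typing import List, Optional


@dataclass
class Descriptor:
    # Real virtio descriptors carry a guest-physical address, length, flags and a
    # "next" index; this sketch keeps only the payload and a busy flag.
    data: bytes = b""
    in_use: bool = False


class SplitVirtqueue:
    """Hypothetical toy model of a virtio split virtqueue."""

    def __init__(self, size: int) -> None:
        self.size = size
        self.desc: List[Descriptor] = [Descriptor() for _ in range(size)]
        self.avail: List[int] = [0] * size  # descriptor indices offered by the guest
        self.avail_idx = 0                  # written only by the front-end
        self.used: List[int] = [0] * size   # descriptor indices returned by the back-end
        self.used_idx = 0                   # written only by the back-end
        self.last_avail = 0                 # back-end's private read cursor
        self.last_used = 0                  # front-end's private read cursor

    # ---- front-end (guest virtio driver) ----
    def add_buffer(self, data: bytes) -> Optional[int]:
        """Publish a buffer in the available ring; return its descriptor index."""
        for i, d in enumerate(self.desc):
            if not d.in_use:
                d.data, d.in_use = data, True
                self.avail[self.avail_idx % self.size] = i
                self.avail_idx += 1
                return i
        return None  # no free descriptor: the queue is full

    def reclaim(self) -> List[int]:
        """Free the descriptors the back-end has put in the used ring."""
        freed = []
        while self.last_used != self.used_idx:
            i = self.used[self.last_used % self.size]
            self.desc[i].in_use = False
            freed.append(i)
            self.last_used += 1
        return freed

    # ---- back-end (QEMU device emulation) ----
    def process(self) -> List[bytes]:
        """Consume newly available buffers and return them through the used ring."""
        handled = []
        while self.last_avail != self.avail_idx:
            i = self.avail[self.last_avail % self.size]
            handled.append(self.desc[i].data)  # "emulate" the I/O, e.g. transmit
            self.used[self.used_idx % self.size] = i
            self.used_idx += 1
            self.last_avail += 1
        return handled


if __name__ == "__main__":
    vq = SplitVirtqueue(4)
    vq.add_buffer(b"pkt-1")
    vq.add_buffer(b"pkt-2")
    print(vq.process())  # [b'pkt-1', b'pkt-2']
    print(vq.reclaim())  # [0, 1]
```

Each ring has a single writer (the guest writes the available ring, the back-end writes the used ring), which in the real design is what lets the two sides share the queue without locking each other out.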

2. Vhost

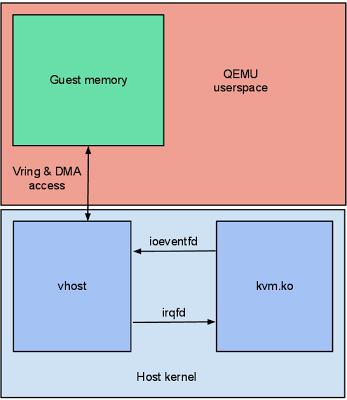

In the initial structure of paravirtualization, the virtio back-end lies inside QEMU, but with vhost the virtio back-end moves into the kernel. vhost is this in-kernel abstraction of the virtio back-end [Picture 2].

Before vhost (KVM alone, with or without virtio), both the data plane and the control plane are handled in QEMU (user space). Every data transfer must go through QEMU, which emulates the guest's I/O accesses in both directions; the virtio emulation code therefore lives in QEMU. vhost moves the virtio emulation code into kernel space. The vhost driver is not a self-contained virtio device implementation: it deals only with the virtqueues (memory shared between QEMU and the guest to speed up data access). This means that user space still handles the control plane, while the kernel takes care of the data plane.

Picture 2. Vhost Implementation. (Source: http://blog.vmsplice.net/2011/09/qemu-internals-vhost-architecture.html)
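The control-plane/data-plane split can be pictured with the following toy Python model. It is only an analogy under stated assumptions: a `queue.Queue` stands in for the virtqueue, blocking on it stands in for the guest's "kick" notification, and a Python thread stands in for the vhost worker that, in the real system, runs in the host kernel and is configured by QEMU through ioctls on `/dev/vhost-net` (for example `VHOST_SET_VRING_KICK`). The names `QemuControlPlane`, `VhostWorker` and `guest_send` are hypothetical.

```python
import queue
import threading
from typing import List, Optional


class VhostWorker(threading.Thread):
    """Hypothetical stand-in for the in-kernel vhost worker (data plane only)."""

    def __init__(self, ring: queue.Queue, backend: List[bytes]) -> None:
        super().__init__(daemon=True)
        self.ring = ring        # shared virtqueue, simplified to a FIFO
        self.backend = backend  # where packets end up (a tap device in reality)

    def run(self) -> None:
        while True:
            pkt: Optional[bytes] = self.ring.get()  # blocks until the guest "kicks"
            if pkt is None:                         # shutdown sentinel from the control plane
                break
            self.backend.append(pkt)                # move the data; QEMU is not involved


class QemuControlPlane:
    """Hypothetical stand-in for QEMU: sets up and tears down, never touches packets."""

    def __init__(self) -> None:
        self.ring: queue.Queue = queue.Queue()  # memory shared with the guest
        self.backend: List[bytes] = []
        self.worker = VhostWorker(self.ring, self.backend)

    def start(self) -> None:
        # Roughly corresponds to VHOST_SET_OWNER, VHOST_SET_MEM_TABLE and the
        # VHOST_SET_VRING_* ioctls that hand the rings over to the kernel.
        self.worker.start()

    def stop(self) -> None:
        self.ring.put(None)  # ask the worker to exit
        self.worker.join()


def guest_send(ring: queue.Queue, pkt: bytes) -> None:
    """Guest front-end: writes straight into the shared ring."""
    ring.put(pkt)


if __name__ == "__main__":
    qemu = QemuControlPlane()
    qemu.start()
    for pkt in (b"pkt-1", b"pkt-2"):
        guest_send(qemu.ring, pkt)
    qemu.stop()
    print(qemu.backend)  # [b'pkt-1', b'pkt-2']
```

Once `start()` has been called, packets written by the guest reach `backend` without passing through any `QemuControlPlane` method, which mirrors the point above: QEMU sets things up and tears them down, while the per-packet work happens elsewhere.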

3. DPDK + OVS

For the theory behind DPDK, see its official documentation (http://dpdk.org/doc/guides/prog_guide/index.html). Here I only summarize the main points of the DPDK+OVS implementation and how it differs from the earlier steps in the evolution of IO virtualization.

In the legacy vhost implementation, vhost is tied to KVM. The DPDK implementation, however, takes vhost out of KVM and runs it in a separate user-space process next to QEMU, which means that vhost no longer depends on KVM.

In the vhost implementation, a virtqueue is shared between QEMU and the guest. In the DPDK+OVS implementation, there is another virtqueue shared between the OVS datapath and the guest. Note that, from DPDK's point of view, OVS is an application running on top of it. So, compared with the vhost implementation in KVM, DPDK takes the vhost abstraction (in fact, a virtio-net device implemented in user space, called vhost or user space vhost) out of KVM and runs it in a separate user-space process.

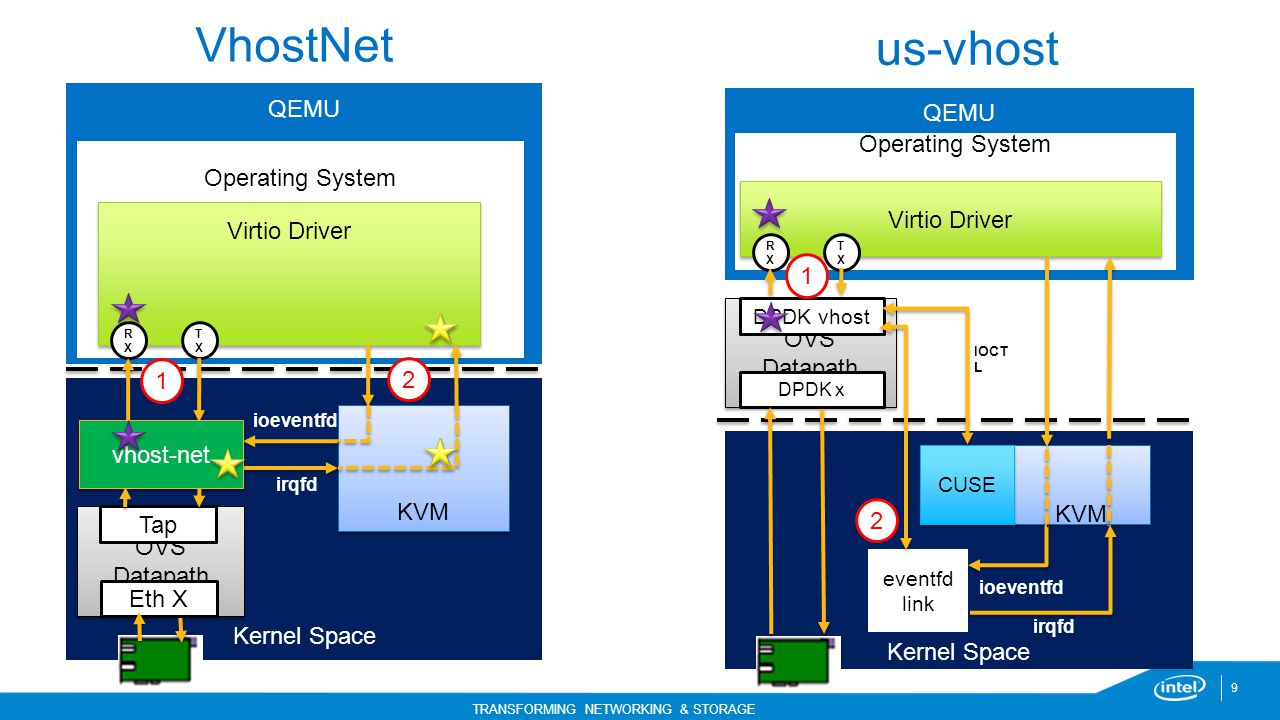

Picture 3. Vhost-net and DPDK Implementation (Source: Intel)

Picture 3 shows clearly how the datapath differs between legacy vhost-net and the DPDK implementation. With vhost-net (KVM + vhost), the datapath (usually called the packet path for socket applications) starts in the user space of the virtual machine and goes through the virtual machine's kernel. Inside the guest kernel, data is placed in virtqueues, where vhost in the host kernel (KVM) picks it up. The data is then passed to the OVS datapath inside the host kernel and on to the physical device. With DPDK+OVS, data from the virtual machine goes to the OVS datapath running in a separate user-space process next to QEMU on the host, and DPDK then transfers it to the physical devices.
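The two packet paths described above can be summarized as data. The following Python sketch simply encodes the hops named in this section, each tagged with where it runs, and lists the hops that execute in the host kernel. The stage labels are hypothetical shorthand for the components in Picture 3, not identifiers from OVS or DPDK, and the guest side is assumed to be the same in both cases so that only the host side differs.

```python
from typing import List, Tuple

Hop = Tuple[str, str]  # (component, where it runs)

# Packet path with KVM + vhost-net, as described in the text.
VHOST_NET_PATH: List[Hop] = [
    ("guest application", "guest user space"),
    ("guest kernel / virtqueue", "guest kernel"),
    ("vhost-net", "host kernel"),
    ("OVS kernel datapath", "host kernel"),
    ("physical NIC", "hardware"),
]

# Packet path with DPDK + OVS; the guest side is assumed unchanged.
DPDK_OVS_PATH: List[Hop] = [
    ("guest application", "guest user space"),
    ("guest kernel / virtqueue", "guest kernel"),
    ("user space vhost + OVS datapath (DPDK)", "host user space"),
    ("physical NIC", "hardware"),
]


def host_kernel_hops(path: List[Hop]) -> List[str]:
    """Return the components that run inside the host kernel."""
    return [name for name, where in path if where == "host kernel"]


def describe(path: List[Hop]) -> str:
    """Render a path as 'component [domain] -> ...' for quick comparison."""
    return " -> ".join(f"{name} [{where}]" for name, where in path)


if __name__ == "__main__":
    print(host_kernel_hops(VHOST_NET_PATH))  # ['vhost-net', 'OVS kernel datapath']
    print(host_kernel_hops(DPDK_OVS_PATH))   # []
```

The empty result for `DPDK_OVS_PATH` is the essence of the comparison: on the host, the packet stays in user space next to QEMU until DPDK hands it to the physical device.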

There are two methods of communication between guest and host: IVSHMEM and Userspace vHost. They are summarized below (Source: https://github.com/01org/dpdk-ovs/blob/development/docs/00_Overview.md).

IVSHMEM

- Suggested use case: Virtual Appliance running Linux with an Intel® DPDK based application.
- Zero copy between guest and switch.
- Option when applications are trusted and the highest small-packet throughput is required.
- Option when applications do not need the Linux network stack.
- Opportunity to add VM-to-VM security through additional buffer allocation (via memcpy).

Userspace vHost

- Suggested use cases: Virtual Appliance running Linux with an Intel® DPDK based application, or a legacy VirtIO based application.
- Option when applications are not trusted and the highest small-packet throughput is required.
- Option when applications either do or do not need the Linux network stack.
- A single memcpy between guest and switch provides security.
- A single memcpy for guest-to-guest traffic provides security.
- Option when using a modified QEMU version is not possible.
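The criteria listed above can be condensed into a small decision helper. This Python sketch is only a reading of the two lists, under the assumption (inferred from the last Userspace vHost item) that IVSHMEM requires a modified QEMU; the function and parameter names are hypothetical and do not come from DPDK or OVS.

```python
from typing import Tuple


def choose_guest_host_method(
    guest_app_uses_dpdk: bool,
    apps_trusted: bool,
    needs_linux_stack: bool,
    modified_qemu_possible: bool,
) -> Tuple[str, int]:
    """Return (method, memcpy count between guest and switch) per the lists above."""
    ivshmem_fits = (
        guest_app_uses_dpdk          # IVSHMEM targets DPDK-based guest applications
        and apps_trusted             # zero copy is listed only for trusted applications
        and not needs_linux_stack    # listed for apps that do not need the Linux stack
        and modified_qemu_possible   # assumption inferred from the Userspace vHost list
    )
    if ivshmem_fits:
        return "IVSHMEM", 0          # zero copy between guest and switch
    return "Userspace vHost", 1      # single memcpy between guest and switch
```

Userspace vHost comes out as the fallback because its list covers both DPDK and legacy VirtIO guests, untrusted applications, and guests with or without the Linux network stack.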

For the Userspace vHost method, the DPDK vhost library contains two vhost implementations: vhost cuse and vhost user. More details are available at: http://dpdk.org/doc/guides/prog_guide/vhost_lib.html

23/1/2016

VietStack
